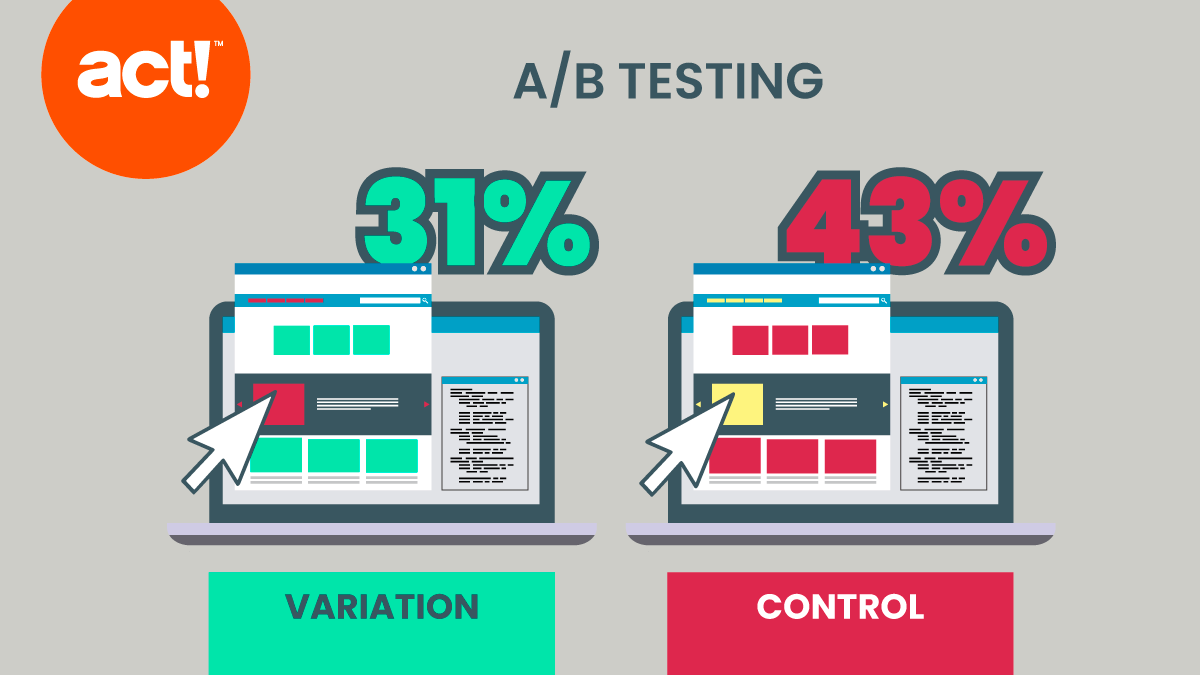

A/B testing, sometimes called split testing or bucket testing, is a marketing method in which you release two versions of a piece of content: the original, known as the ‘control’, and a second version with one variation.

The purpose is to analyze the results after the content is released and assess which version performs better against your overall goal. For example, you might send a marketing email with two different headlines, then track the open and click rates to see how one email performed compared to the other.

A/B testing takes a lot of the guesswork out of marketing and can improve the chances that a campaign or product succeeds. In this article, we cover the basics of A/B testing, why you should use it, how to avoid common A/B testing mistakes and how to implement A/B testing in your marketing.

How A/B testing works

A/B testing in its simplest form involves releasing two versions of the same content with a slight variation to test your audience’s response. One half of your audience is shown the original A version, which is referred to as the ‘control’, whilst the other half is shown the B version, also known as the ‘variation’.
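The 50/50 split described above can be sketched in a few lines of Python. This is a minimal illustration rather than how any particular platform does it: the function name `assign_variant`, the experiment label and the user IDs are all hypothetical. It hashes each user ID so that the same person always lands in the same group, which is one common way to keep the experience consistent across visits.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "landing-page-test") -> str:
    """Return 'A' (control) or 'B' (variation) for a given user.

    The experiment name is mixed into the hash so that a different
    (hypothetical) experiment would split the same users differently.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Treat the first 8 bytes of the hash as an integer: even -> A, odd -> B.
    bucket = int.from_bytes(digest[:8], "big") % 2
    return "A" if bucket == 0 else "B"


if __name__ == "__main__":
    # Hypothetical audience of 10,000 users.
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}")] += 1
    print(counts)  # the two groups should be roughly equal in size
```

Assigning by hash instead of flipping a coin on every visit means a returning visitor never sees both versions, which would otherwise blur the comparison.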

Using the analytics for each version, you can compare A and B directly. B might attract more clicks than A, fewer clicks, or roughly the same number. Whatever the outcome, you learn more about your audience and their habits. This is particularly useful for landing pages on websites or apps: using the data, you can select the more effective version of your page and move forward with it.
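As a rough sketch of that comparison, the snippet below computes the click rate of each version and the relative lift of B over A. The class name `VariantResult`, the function `relative_lift` and the visitor and click counts are made up for illustration and are not taken from any real campaign.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    name: str
    visitors: int  # people who were shown this version
    clicks: int    # people who clicked

    @property
    def click_rate(self) -> float:
        # Guard against division by zero when a version had no visitors.
        return self.clicks / self.visitors if self.visitors else 0.0


def relative_lift(control: VariantResult, variation: VariantResult) -> float:
    """Relative change in click rate of the variation versus the control."""
    if control.click_rate == 0:
        raise ValueError("control has no clicks, so lift is undefined")
    return (variation.click_rate - control.click_rate) / control.click_rate


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    a = VariantResult("A (control)", visitors=5000, clicks=400)
    b = VariantResult("B (variation)", visitors=5000, clicks=480)
    for v in (a, b):
        print(f"{v.name}: click rate {v.click_rate:.1%}")
    print(f"Relative lift of B over A: {relative_lift(a, b):+.1%}")
```

A positive lift means B outperformed A, a negative lift means it did worse, and a value near zero means the two were relatively similar.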

Why should you use A/B testing?

Continuous A/B testing can drastically increase the effectiveness of your marketing campaigns. Run over a period of time, it shows you which way your audience is moving so that you can adapt future content to follow. It also carries less risk than simply altering existing content, which could lead to a temporary loss in engagement or even sales, whereas an A/B test keeps half of your audience on the original.

By then using the ‘winner’ of the first A/B test as the control in further testing, you can apply a ‘survival of the fittest’ methodology and keep seeking out the strongest landing page or call to action. This highly focused strategy reduces risk while improving your chances of meeting your business goals.

When does A/B testing work well?

One A/B testing success story comes from Electronic Arts, a well-known video game company that, on the release of its new SimCity game, wanted to make sure its webpage would maximize sales.

The A version (control) offered a 20% discount on any future purchase made with EA, whilst the B version (variation) offered a pre-order bonus that included additional items and options within the game. The result showed EA that the B variation performed 40% better than the A control. The hypothesis the team formed from the data was that fans of the game series were typically die-hard fans and less interested in other games. A game such as SimCity typically involves a large investment of time, so the pre-order bonus was of far more value to them.

Whilst the EA team could have made this assumption on their own, it would have been a gamble, and they could just as easily have assumed, wrongly, that players would want to buy their other games too. This room for human error is why A/B testing is so important. However, the benefit only holds if the hypothesis drawn from the data is correct.

Problems with A/B testing that you should avoid

A/B testing, as with any marketing strategy, has room for mistakes and poor choices. These are typically down to human error. Here are several things to consider to get the most out of your A/B testing.

Careful consideration must be taken when analyzing the results and forming a hypothesis. Whilst a single test might lead to one conclusion, another might point in a different direction and produce a different result entirely. It is important to conduct ongoing tests and take a critical look at the analytics to form the most accurate opinion.

Whilst it might seem like an excellent idea to A/B test all of your pages or different marketing material across your business, this will more than likely lead to confusing results. The beauty of A/B testing is that a single slight variation lets you see which version is more effective. As soon as you bring multiple tests into the mix, you open the door to misunderstood or conflicting data. Experts suggest no more than four tests at one time; for a small business, we recommend one.

Another way A/B testing can fall short is when tests are run for too short a time or with too small an audience. VWO’s A/B significance calculator is a good place to start, as it tells you whether you have enough data to draw an accurate conclusion from the test.
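To give a feel for what a significance check does, here is a minimal sketch of a two-proportion z-test, a standard textbook method for comparing two conversion rates. It is an assumption chosen for illustration and not necessarily the method used by VWO’s calculator or any other tool; the function name and the sample figures are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference between two rates.

    conv_a / conv_b: number of conversions (e.g. clicks) in each group.
    n_a / n_b: number of people shown each version.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("both groups need at least one visitor")
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that A and B perform the same.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all, so no evidence of a difference
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Same 8% vs 10% rates, very different audience sizes (made-up figures).
    for label, args in [("small test", (4, 50, 5, 50)),
                        ("large test", (400, 5000, 500, 5000))]:
        z, p = two_proportion_z_test(*args)
        verdict = "significant" if p < 0.05 else "not significant"
        print(f"{label}: z = {z:.2f}, p = {p:.4f} ({verdict} at the 5% level)")
```

The two hypothetical tests show the same underlying rates, yet only the larger audience produces a result that clears the conventional 5% threshold, which is why a test that is too short or too small can mislead you.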

Take the next step

Before you start A/B testing, you need a platform that lets you do it with ease and accuracy. Whilst some popular platforms such as MailChimp allow you to A/B test your email marketing, you may prefer a platform that is more advanced yet easy to use. With Act! you can A/B test your marketing emails with precision, giving you the best quality results and return.

A/B testing, when compared to other marketing strategies, proves less risky and over time has the potential to drastically increase sales, conversions, engagement, or brand awareness. Act! can take the weight of responsibility off your desk and help you to achieve these results.